Review – After the Spike: Population, Progress, and the Case for People

After the Spike: Population, Progress, and the Case for People (by Dean Spears & Michael Geruso; published 8 July 2025) is a pro-natalist, pro-progress argument aimed largely at people who have concerns about overpopulation. The authors claim the world is heading towards a population peak (they refer to this as ‘the spike’) followed by sustained depopulation, driven by fertility rates that have fallen below replacement across much of the world.

Their core normative stance is simple: more people living good lives is (all else equal) a good thing, and a shrinking humanity is not a win.

We are reading this book as part of an EA book club in Melbourne, Australia – so I thought I’d publish my thoughts.

They emphasise that fertility decline is now widespread (not just a niche Japan or Korea problem).

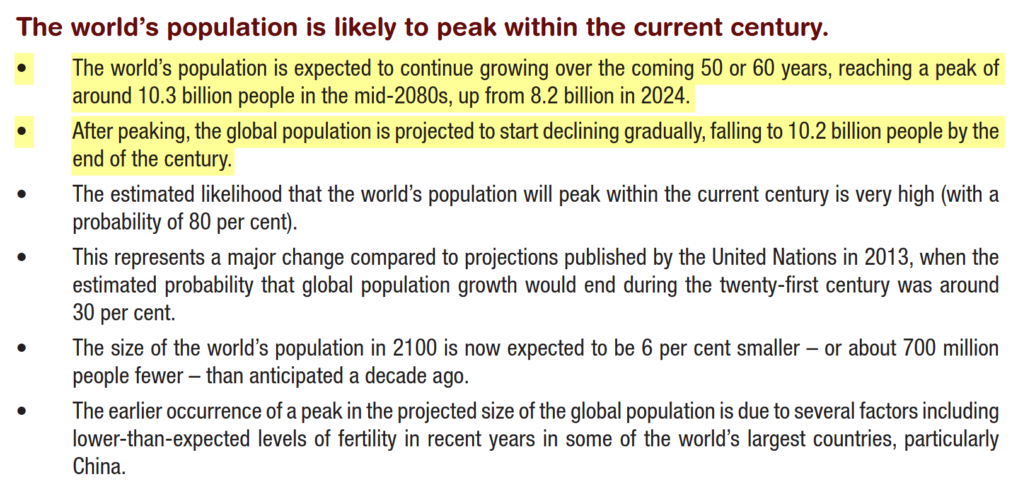

They push a long-run extrapolation[1]: if the world eventually looks like today’s low-fertility places (many countries with ~1-2 children per woman), population will not stabilise but keep shrinking. In the book’s opening framing, they postulate that from a peak of around 10 billion, there could be a fall to below ~2 billion within roughly three centuries. However, critics argue the book overstates the depopulation threat (at least on the UN’s central scenario) and risks fuelling a false panic.[2]
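To get a feel for the arithmetic behind this kind of extrapolation, here is a minimal sketch in Python. It is my own toy model, not the authors’ method: it assumes a constant total fertility rate, a replacement rate of 2.1 children per woman, a 30-year generation, and no migration, mortality change or age-structure effects. The function name and all parameter values are illustrative.

```python
def project_population(peak, tfr, years, replacement=2.1, generation_years=30):
    """Toy projection: each generation is (tfr / replacement) times the size of the last.

    All defaults are illustrative assumptions, not figures from the book.
    """
    generations = years / generation_years
    return peak * (tfr / replacement) ** generations


if __name__ == "__main__":
    peak = 10e9  # roughly the peak discussed in the text
    for tfr in (2.1, 1.8, 1.5):
        later = project_population(peak, tfr, years=300)
        print(f"TFR {tfr}: about {later / 1e9:.2f} billion after 300 years")
```

Under these assumptions, fertility at exactly replacement holds population flat, while anything below it compounds downwards each generation, which is why the long-run numbers are so sensitive to where fertility finally settles.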

The authors want progress-minded people to treat a stable population (via better conditions for wanted children) as a serious public good because, in their view, people are the source of progress, and progress is how we solve the problems people worry about. All well and good. However, as a technoprogressive who is bullish on AI superintelligence (SI) in the short to medium term, I think human headcount may stop being the bottleneck for ideas -> progress -> solutions to problems.

What about Near-term Superintelligence?

Before SI, the rough intuition is:

more people -> more researchers/entrepreneurs -> more ideas + more trials -> more progress

With SI, assuming it’s under our control, we can spin up (or rent) vast amounts of cognitive labour. So the engine becomes:

- Number of AI research agents you can run in parallel

- Their quality (reasoning, creativity, long-horizon planning, scientific taste)

- Compute + energy + capital to run them

- Access to real-world experiments (labs, factories, clinical trials, field tests)

So, innovation scales less with human population and more with compute, experimental throughput, and deployment capacity.
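One way to picture this shift is a toy bottleneck model, sketched below in Python. It is purely illustrative and entirely my own assumption: progress is capped by whichever stage (ideas, experiments, deployment) has the least capacity, with all quantities in arbitrary units. The function names and numbers are hypothetical.

```python
def stage_capacities(human_researchers, ai_agents, experiment_capacity, deployment_capacity):
    """Return the capacity of each stage (arbitrary units, hypothetical model)."""
    return {
        "ideas": human_researchers + ai_agents,  # cognitive labour, human or AI
        "experiments": experiment_capacity,      # labs, trials, field tests
        "deployment": deployment_capacity,       # building and rolling things out
    }


def progress_rate(**kwargs):
    """Progress is limited by the scarcest stage (a simple min() bottleneck)."""
    return min(stage_capacities(**kwargs).values())


def binding_constraint(**kwargs):
    """Name of the stage currently holding progress back."""
    stages = stage_capacities(**kwargs)
    return min(stages, key=stages.get)


if __name__ == "__main__":
    before = dict(human_researchers=100, ai_agents=0,
                  experiment_capacity=500, deployment_capacity=800)
    after = dict(before, ai_agents=10_000)  # SI adds abundant cognitive labour
    print(binding_constraint(**before), progress_rate(**before))
    print(binding_constraint(**after), progress_rate(**after))
```

In this sketch, adding AI agents raises progress only until experiments become the scarce stage; after that, more cognitive labour (human or AI) changes nothing unless experimental or deployment capacity grows too.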

What if we get SI, but not robotics?

Even with SI doing the intellectual heavy lifting, human population still matters via constraints that SI can’t magic away when interacting with the real world. The first is implementation throughput: building fabs, power plants, robots, supply chains, housing, hospitals and so on is all still physical, as are harvesting energy and minerals, running factories, and the logistics of ferrying stuff about. The second is experimentation capacity: wet labs, benchwork and real-world testing remain, without advanced robotics, a human-dominated domain. In medicine, too, a larger population means more bodies to test on (ethics permitting, of course).

Larger human populations also generate demand, scaling up to bigger markets with more varied and nuanced needs. Historically this has motivated product innovation. With a smaller population, more niches get pruned and there are fewer economies of scale, because industries can’t amortise fixed costs as easily.

What if SI turns out to be morally unreliable?

Arguably, humanity is well served by a diversity of values and oversight. If SI is powerful, the risk isn’t so much running out of ideas as optimising the wrong things, which may be catastrophic.[3] More people can mean more pluralism, legitimacy, contestation, and “immune system” capacity against capture by a small coalition, assuming democracy remains intact.

The uncomfortable point: SI can make humans irrelevant to progress, not to value

If SI arrives and is not aligned, progress could accelerate while human welfare stagnates or worsens. In that world, Spears/Geruso’s argument about population sustaining progress becomes beside the point: the central variable becomes alignment and governance, not demography.

Assuming SI and alife are not sentient, we can make two different claims:

- Positive claim (innovation mechanics): progress won’t need lots of humans once SI exists.

- Normative claim (what matters): more humans can still be good because they’re additional welfare subjects (not because they are drivers of R&D). This also assumes that human lives are and remain reliably net positive.

If you’re a total utilitarian, you can accept the normative claim even while rejecting the instrumental case for more people, which is the case the positive claim undercuts.

Though I argue elsewhere that AI may become sentient, and that SI may be more moral than us.

Three plausible regime outcomes

Regime 1: AI as accelerator for human civilisation (assuming it is aligned and widely deployed). Innovation rate scales with compute + lab automation + regulation speed. As such, human population matters less for discovery, but humans are still useful for deployment (while robotics is still inadequate), for governance (while humans are more morally capable), and as beneficiaries (though AI may be too, if/when it becomes sentient/morally relevant).

Regime 2: Centralised SI (few actors control it, or it controls itself). Progress is extremely fast, but narrow and path-dependent. Population size matters mainly for political resistance, legitimacy, and avoiding oligarchic lock-in, or an AI takeover.

Regime 3: AI-heavy automated economy where robots do implementation too. Even physical constraints loosen. Then demography matters mostly for value aggregation (whose preferences count) and for ethical reasons (how many lives exist).

Takeaways

It’s tempting to think that if SI is coming, population size doesn’t matter. However, SI timelines are uncertain, and fertility trends play out over decades. Staking civilisation’s long-run trajectory on a contested forecast would be an all-in gamble, and not a clever one.

So under certain assumptions:

- More people -> more potential welfare (if lives are good)

- More people -> higher stake in alignment and governance

- Progress under SI -> scales with compute/experiments/deployment

That’s it for now. I intend to update this post as the book club progresses.

Footnotes

1. This extrapolation goes well beyond the UN projections, which do not currently forecast a near-term cliff by 2100. The UN’s World Population Prospects 2024 projects a peak around the mid-2080s (~10.3b) and then only a modest decline to ~10.2b by 2100.

2. See ‘After the Spike and the Myth of Depopulation’ at Population Matters: “After the Spike by Dean Spears and Michael Geruso focuses on depopulation, but its arguments are built on shaky ground, stirring up fear, rather than focusing on the facts. What’s more troubling is the authors don’t disclose their funding from Elon Musk, who has frequently made headlines with his concern over low birth rates.”

3. SI may optimise something weird, unrelated and inimical to human flourishing, or optimise a proxy for a value instead of the value itself.