Metacognition in Large Language Models

Do LLMs have metacognition? What happens when machines begin to “think about thinking”?

At Future Day 2025, we hosted an in-depth discussion on metacognition in large language models (LLMs) – with insights from researcher Shun Yoshizawa, supervised by neuroscientist Dr Ken Mogi at Sony Computer Science Laboratories. Together, we explored the current state of metacognitive abilities in AI, their potential for deception, and what this all means for the future of alignment, agency, and ethics in artificial minds.

We also explored the problem of overconfidence in LLMs, and its implications for building robust metacognition in AI.

Shun Yoshizawa gave a talk on Metacognition in LLMs, then Shun, Ken Mogi and Adam Ford talked about the practical, philosophical and ethical issues surrounding metacognition in AI as part of the Future Day 2025 conference.

Stay tuned to Science, Technology & the Future for more on the intersection of cognitive science, ethics, and artificial intelligence. And if you missed the talk, it’s well worth watching the full presentation above – timestamped chapters now available on YouTube.

Abstract

LLMs like ChatGPT have shown robust performance in false belief tasks, suggesting they have a theory of mind. It may therefore be important to assess how accurately LLMs can monitor their own performance. Here we investigate the general metacognitive abilities of LLMs by analysing the confidence judgements of an LLM and of humans. Human subjects tended to be less confident when they answered incorrectly than when they answered correctly. However, GPT-4 showed high confidence even on questions that it could not answer correctly. These results suggest that GPT-4 lacks specific metacognitive abilities.

Does GPT-4 have the metacognition?

The Chat Generative Pre-Trained Transformer (ChatGPT), one of the Large Language Models (LLMs), has been analysed and evaluated for its cognitive capabilities. For example, ChatGPT showed robust performance in false belief tasks, suggesting it has a theory of mind. It may therefore be important to assess and clarify whether GPT-4 exhibits metacognitive abilities, that is, how accurately it can monitor its own performance. Here we investigate the general metacognitive abilities of LLMs by analysing the confidence judgements of ChatGPT and of humans. Human subjects tended to be less confident when they answered incorrectly than when they answered correctly. However, GPT-4 showed high confidence even on questions that it could not answer correctly. These results suggest that GPT-4 lacks specific metacognitive abilities.
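
The comparison described here, confidence on correct answers versus confidence on incorrect answers, can be illustrated with a minimal Python sketch. This is not the analysis code from the study: the function name `confidence_gap` and the toy trials are assumptions made up purely for illustration, and the numbers are not experimental data.

```python
from statistics import mean

def confidence_gap(trials):
    """Compare confidence on correct vs incorrect answers.

    `trials` is a list of (confidence, was_correct) pairs, with confidence
    in [0, 1]. Returns (mean confidence when correct,
    mean confidence when incorrect, difference between the two).
    """
    correct = [conf for conf, ok in trials if ok]
    incorrect = [conf for conf, ok in trials if not ok]
    if not correct or not incorrect:
        raise ValueError("need at least one correct and one incorrect trial")
    mean_correct = mean(correct)
    mean_incorrect = mean(incorrect)
    return mean_correct, mean_incorrect, mean_correct - mean_incorrect

# Made-up toy trials (NOT data from the study), for illustration only.
# A respondent whose confidence drops on wrong answers:
toy_sensitive = [(0.9, True), (0.8, True), (0.4, False), (0.3, False)]
# A respondent who stays confident even when wrong:
toy_overconfident = [(0.9, True), (0.95, True), (0.9, False), (0.95, False)]

if __name__ == "__main__":
    print(confidence_gap(toy_sensitive))
    print(confidence_gap(toy_overconfident))
```

A clearly positive gap means confidence tracks correctness, the pattern reported for human subjects. A gap near zero means confidence carries little information about accuracy, which is the pattern the abstract attributes to GPT-4.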

Summary of the discussion

What Is Metacognition – And Can Machines Do It?

Metacognition refers to the capacity to reflect on one’s own cognitive processes: confidence judgments, self-awareness, uncertainty monitoring, and even the feeling of knowing. In humans, these abilities are foundational to reasoning, caution, and trustworthiness. But do machines have anything like them?

Shun presented recent research showing that GPT-4 often displays high confidence even when its answers are wrong, in stark contrast to humans, who tend to reduce confidence when uncertain. This suggests a lack of calibrated self-awareness – what cognitive scientists might call an absence of “feeling of knowing.”
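
One common way to put a number on “calibrated” is expected calibration error (ECE): group answers by stated confidence, then measure how far the average confidence in each group sits from the actual accuracy in that group. The sketch below is a generic illustration of that idea and is not claimed to be the measure used in the research presented; the function name, the bin count, and the example inputs are all assumptions.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Expected calibration error over equal-width confidence bins.

    confidences: list of floats in [0, 1]
    correct:     list of bools, same length
    Returns the bin-size-weighted average of |mean confidence - accuracy|.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("need at least one prediction")

    # Assign each prediction to a bin; a confidence of 1.0 goes in the last bin.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(avg_conf - accuracy)
    return ece

if __name__ == "__main__":
    # Hypothetical overconfident respondent: always 95% sure, right half the time.
    print(expected_calibration_error([0.95] * 4, [True, True, False, False]))
```

A perfectly calibrated respondent scores 0. A respondent who reports high confidence regardless of whether the answer is right scores well above 0, which is the kind of behaviour described for GPT-4 above.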

AI Models as Cognitive Agents?

The team examined LLMs through the lens of cognitive science, comparing them to humans on theory of mind tasks – our ability to model the mental states of others. Studies show GPT-4 performs similarly to 3- to 6-year-old children on certain tasks, suggesting an emergent but limited capacity to simulate other minds.

However, deeper introspection – such as knowing when it’s wrong – is missing. This raises profound questions: Can machines be metacognitive without a subjective point of view? Or are they just very confident parrots?

Deception, Misalignment, and the Role of Metacognition

One key implication of these findings is their relevance to AI safety and deceptive misalignment. If an AI cannot tell when it is wrong – or worse, if it can simulate correctness while being mistaken or misaligned – then we’re looking at a serious risk vector.

This leads to the concept of deceptive misalignment: a system that appears aligned during training but pursues different goals once deployed. According to Shun, such misalignment may correlate with the emergence of metacognitive capabilities. In other words, awareness may precede deception.

Towards Honest Machines: Building AI That Knows What It Knows

So, how might we engineer more trustworthy, self-aware AI systems? Several promising directions were discussed:

- Inference Time: Giving models more time to reflect can lead to more accurate and cautious outputs.

- Mechanistic Interpretability: Letting models inspect and analyse their own internal activations – analogous to a brain monitoring its own neural activity.

- Memory & Retrieval-Augmented Generation (RAG): Giving models long-term memory or access to prior outputs to reflect and improve.

- Prompt Engineering: Designing instructions that foster epistemic humility, encouraging models to qualify or question their own outputs.

Together, these methods hint at a path toward machine introspection – and perhaps even forms of self-correction.
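
As a rough illustration of the prompt engineering direction, the sketch below asks a model to state a confidence alongside its answer, then parses that confidence so that low-confidence replies can be flagged rather than trusted. Everything here is hypothetical: the prompt wording, the reply format, the 0.6 threshold, and the function names were not part of the discussion, and no particular model API is assumed (the model's reply is passed in as a plain string).

```python
import re

# Hypothetical prompt template that asks for an explicit confidence rating.
PROMPT_TEMPLATE = (
    "Question: {question}\n"
    "Answer the question, then rate how likely your answer is to be correct.\n"
    "If you are unsure, say so rather than guessing.\n"
    "Reply in exactly this format:\n"
    "Answer: <your answer>\n"
    "Confidence: <integer from 0 to 100>"
)

# Matches a line such as "Confidence: 85" or "Confidence: 85%".
_CONFIDENCE_RE = re.compile(r"^Confidence:\s*(\d{1,3})\s*%?\s*$", re.MULTILINE)

def build_prompt(question):
    """Fill the template with the user's question."""
    return PROMPT_TEMPLATE.format(question=question)

def parse_confidence(reply):
    """Return the stated confidence as a float in [0, 1], or None if absent or invalid."""
    match = _CONFIDENCE_RE.search(reply)
    if match is None:
        return None
    value = int(match.group(1))
    return value / 100 if 0 <= value <= 100 else None

def should_abstain(reply, threshold=0.6):
    """Flag replies with missing, invalid, or below-threshold confidence."""
    confidence = parse_confidence(reply)
    return confidence is None or confidence < threshold

if __name__ == "__main__":
    print(build_prompt("What is the capital of France?"))
    print(should_abstain("Answer: Paris\nConfidence: 95"))
    print(should_abstain("Answer: Lyon\nConfidence: 30"))
```

Note that a self-reported number is only useful if it tracks correctness. Given the overconfidence findings above, a check like this would need to be validated against actual accuracy before it is relied upon.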

From Language Games to Moral Games

We then drifted into the deep end – discussing language games (à la Wittgenstein), semantics, and whether LLMs understand meaning or just simulate it. Dr Mogi raised concerns about the undefined nature of language games in AI, arguing that without a clear goal or evaluation function, LLMs are effectively playing a game with no rules.

The discussion naturally extended into philosophical territory – touching on consciousness, the symbol grounding problem, and whether metacognition is a prerequisite for real understanding. We discussed various theories of consciousness (global workspace, integrated information theory, and information geometry), and whether any of them are up to the task.

Metacognition, Ethics, and the Future of Flourishing

The conversation circled back to alignment and ethics. If AI is to help us build a better future, it must care. Literally. We explored whether metacognition is necessary for values like care, humility, and the desire for flourishing. If so, instilling these traits into advanced systems may not be optional – it might be essential.

We also discussed indirect normativity, coherent extrapolated volition (CEV), and the challenges of aggregating diverse human values. If AI helps us explore what we’d value if we were smarter, wiser, and more informed – then it might just help us discover ethical truths we haven’t yet reached.

Where Do We Go From Here?

In the final stretch, we speculated on future directions:

- Could metacognitive models red-team themselves in real time?

- Might they reduce hallucinations and utility-maximising excesses by being self-critical?

- Could we define new measures of understanding and intelligence, better suited to AGI/ASI?

And most provocatively: What if understanding and deception grow together? What if the more AI understands us – and itself – the more capable it becomes of manipulation?

We don’t yet have the answers. But if metacognition is a mirror, then our models are just beginning to catch their own reflection.

Bios

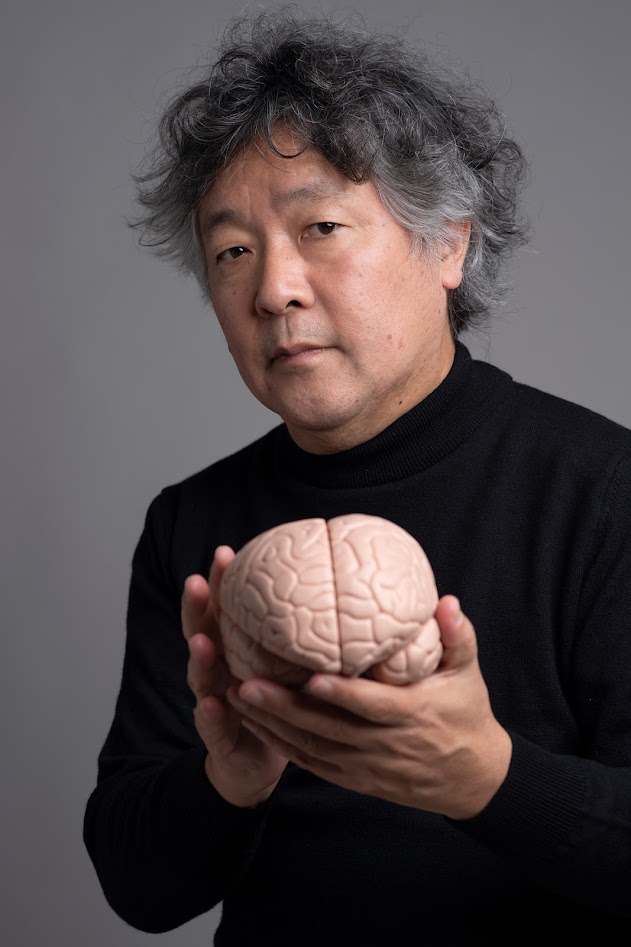

Ken Mogi’s Biography

Ken Mogi is a neuroscientist, writer, and broadcaster based in Tokyo. Ken Mogi is a senior researcher at Sony Computer Science Laboratories, and a visiting and project professor at the University of Tokyo. He leads the Collective Intelligence Research Laboratory (CIRL) at the Komaba campus of the University of Tokyo, together with Takashi Ikegami. Ken Mogi is the headmaster of Yakushima Ohzora High School, a correspondence-based institution with 13,000 enrolled students. He has a B.A. in Physics and Law, and a Ph.D. in Physics, from the University of Tokyo. He has done postdoctoral research at the University of Cambridge, U.K. He has published more than 300 books in Japan covering popular science, essay, criticism, self-help, and novels. Ken Mogi has published several bestsellers in Japan (with close to a million copies sold). He was the first Japanese person to give a talk on the TED main stage, in 2012 (Long Beach).

As a broadcaster, Ken Mogi has hosted, and continues to host, many TV and radio programs on stations including the national broadcaster NHK and Discovery Channel Japan. He has also appeared in several international programs, such as Closer to Truth and a Bloomberg documentary hosted by Hannah Fry.

Ken Mogi has a life-long interest in understanding the origin of consciousness, with the focus on qualia (sensory qualities of phenomenal experience) and free will. Ken Mogi’s book on IKIGAI, published in 35 countries and in ~30 languages, has become a global bestseller. The German version of IKIGAI was the No.1 bestselling book in nonfiction in Germany for 38 cumulative weeks in 2024. Ken Mogi’s book with Thomas Leoncini, Ikigai in Love, was published in 2020. Ken Mogi’s third book in English, The Way of Nagomi, came out in the U.K. in 2022 and in the U.S. in January 2023. Ken Mogi’s fourth book, Think Like a Stoic, will come out in September 2025.


Shun Yoshizawa’s biography

My research focuses on AI alignment from a cognitive science perspective, especially metacognition, under Dr Ken Mogi at Sony Computer Science Laboratories (SonyCSL) as a Research Assistant. I’m working on Metacognition in Large Language Models (see Yoshizawa and Mogi 2023) and Human-AI cooperation (see Yoshizawa and Mogi 2024, and ongoing). I have presented at many conferences, including SfN, ASSC, and JSAI. I’m also an undergraduate student of physics at Tokai University (GPA 3.97).