p(Doom) Watch – Different Experts' Probabilities of Doom from AI

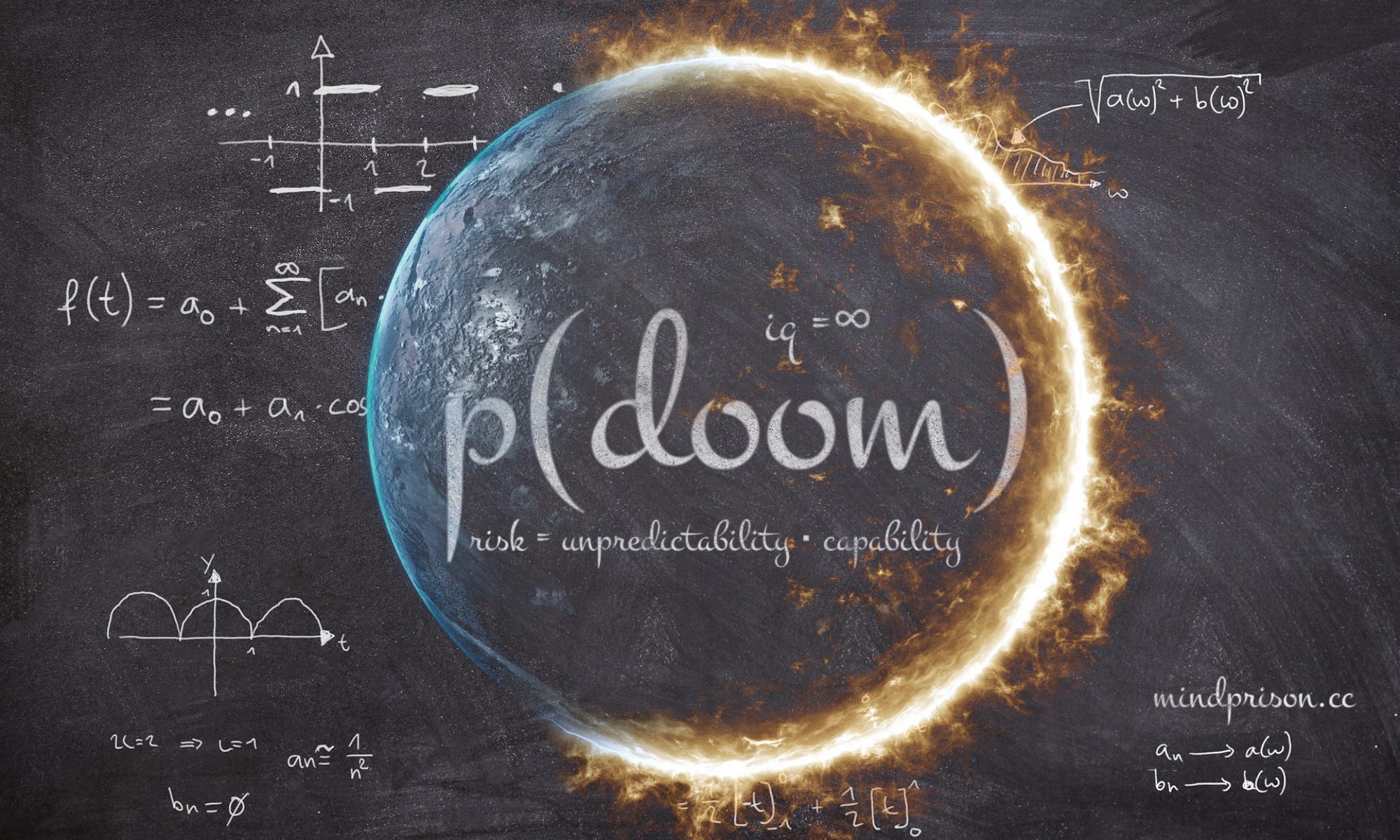

In the high-stakes world of artificial intelligence research, there is perhaps no metric more contentious than p(doom): the probability assigned to catastrophic outcomes, including human extinction, resulting from advanced AI. Remarkably, the people seen as best positioned to understand this risk are in profound disagreement. While several of the field's pioneers, such as Turing Award winners Geoffrey Hinton and Yoshua Bengio, put the risk at an alarming 10% to 20% or higher, another "Godfather of AI," Yann LeCun, dismisses it as effectively zero.

By examining the diverse p(doom) estimates offered by leading researchers, forecasters, and industry CEOs, from the alarmists to the skeptics, we can unpack the core philosophical and technical divides that define the modern AI safety debate.

In AI safety, p(doom) is the probability of existentially catastrophic outcomes (so-called "doomsday scenarios") resulting from artificial intelligence. The exact outcomes in question differ from one prediction to another, but they generally refer to existential risk from artificial general intelligence.

Estimates of p(doom) vary significantly, and some of those cited here may be out of date.